ECE 225A: Probability & Statistics for Data Science

Instructor: Prof. Alon Orlitsky

The course covered a wide range of concepts in Probability and Statistics. It not only introduced me to new ideas but also highlighted the power of probabilistic reasoning and theoretical understanding. There are many concepts that I wasn’t aware of before. To highlight a few:

- The concept of Memorylessness in Geometric and Exponential distributions and its applications.

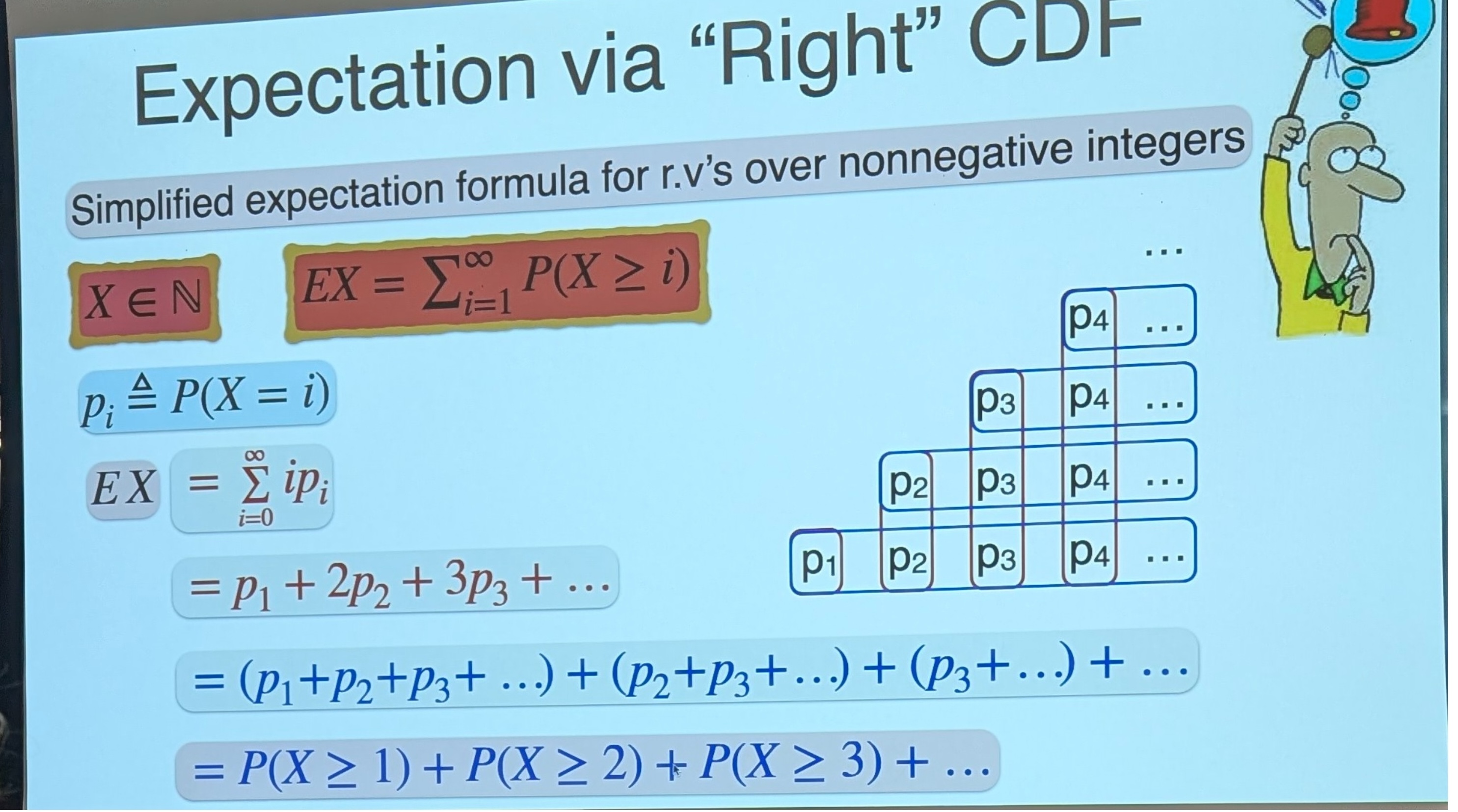

- The Right CDF approach to calculate expectations of a distribution.

- Normal distribution approximates the Binomial Distribution.

- WLLN (Weak Law of Large Numbers) is a great generalisation of the CLT.

- How weak bounds like Markov can be used to derive strong bounds like the Chernoff bounds.

What I liked the most about the course was that the concepts were coupled with real-world applications. For example, we discussed the question "why we should vote?" using probability, along with analogies that helped understand the concepts better, such as understanding the reciprocity of proximity using Newton's first law. What made the class even more special was Prof. Orlitsky using memes and cartoons to explain varied concepts in an engaging and interactive manner. Prof. Orlitsky really puts in a great effort in his slides and presentation.

Overall, it was a great course to pursue as a Data Science major. The concepts and mathematics really help when reading research papers in ML/DS.

Fall 2024

CSE291h: Advanced Data-driven Text Mining

Instructor: Prof. Jingbo Shang

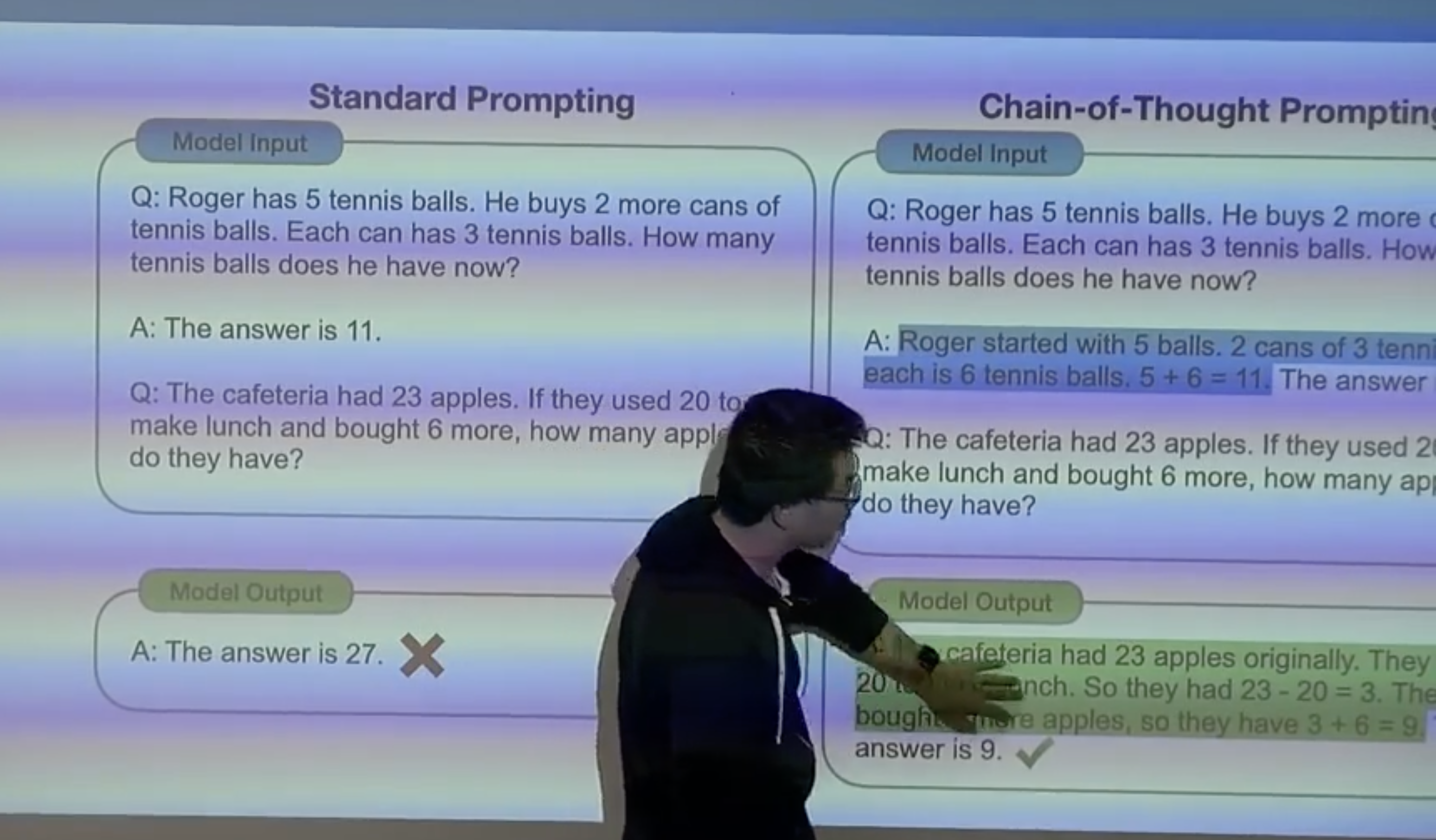

This course covered a wide range of NLP topics, starting with foundational concepts such as frequency-based word embeddings, and progressing to prediction-based embeddings (Word2Vec, GloVe), language models (including neural language models), and their respective advantages and limitations. The course also delved into Sentiment Analysis, Information Retrieval, and LLMs in fair detail, providing a solid foundation for understanding these advanced topics.

One of the highlights was learning about the professor’s own work in Phrase Mining, which enhances feature representation and improves textual understanding. This included an exploration of three methods for phrase mining: supervised, unsupervised, and weakly/distantly supervised learning, a new concept for me. As part of the assignments, I applied techniques such as SegPhrase (weakly supervised, using manually annotated labels) and AutoPhrase (distantly supervised, leveraging existing knowledge bases like Wikipedia) for mining phrases.

Additionally, the professor organized a graded Kaggle competition focused on multi-class text classification. This was a challenging and intensive experience, where I experimented with various embedding and modeling techniques to achieve a strong score. Overall, I found this course highly beneficial as a Data Science major. While it doesn’t focus directly on the latest hot topics like LLMs, it provides a robust understanding that is essential for working with these models in the future.

Fall 2024

DSC260: Data Science Ethics & Society

Instructor: Prof. David Danks

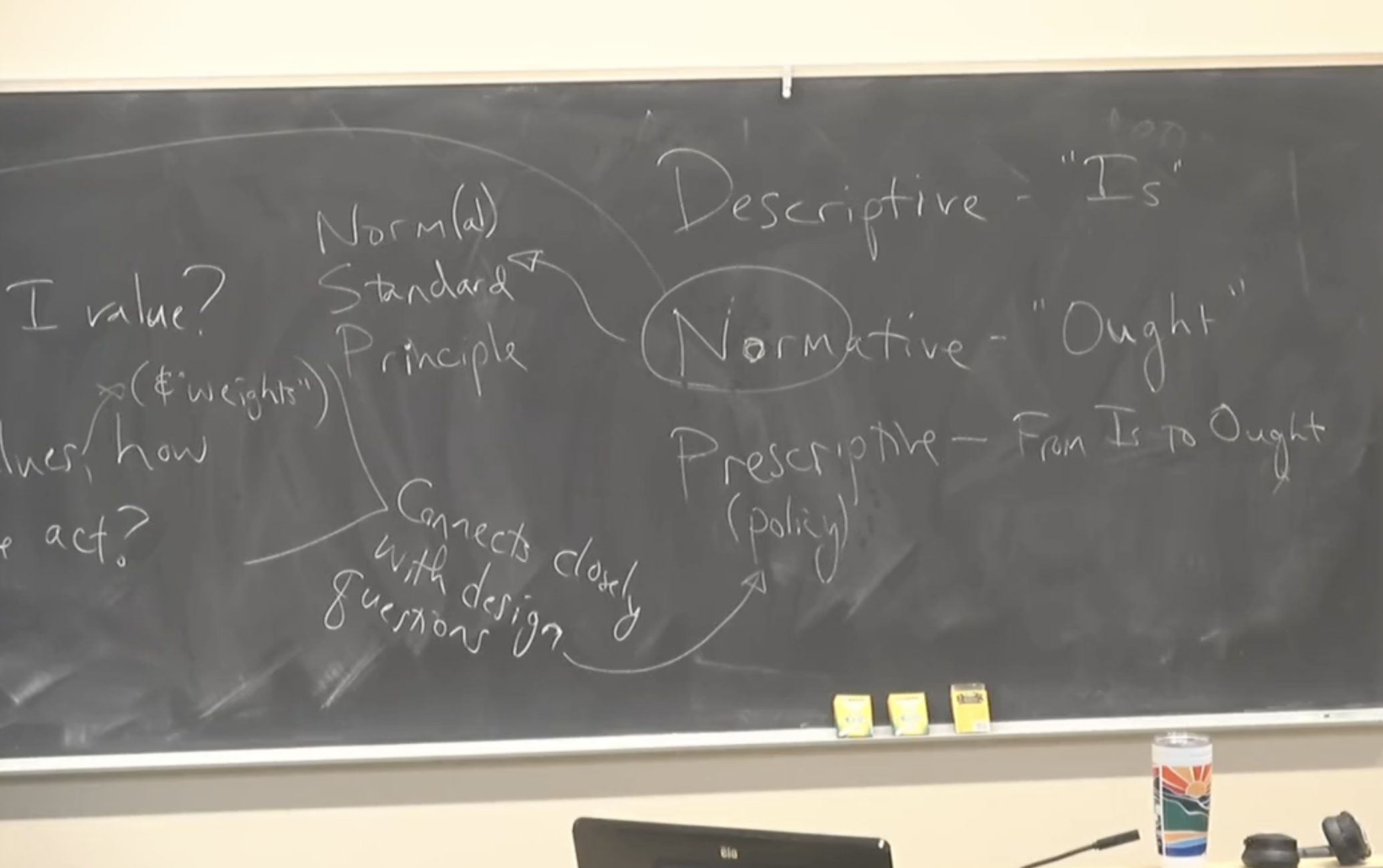

Prof. Danks' classes are incredibly interactive, and his charisma makes even the most complex concepts easy to understand. He simplifies difficult topics by using relatable analogies, which helped me grasp the material better.

The course covered a wide range of topics, including values, stakeholders, consent, algorithmic-justice, bias, privacy, and explainability. We also explored societal practices such as delegation, organizational incentives, and accountability, as well as governance mechanisms, including law, regulation, and norms.

These ethical concerns often go unchecked in data science projects. It is common for practitioners to simply source datasets from the web and use them directly in their work without fully understanding the potential ethical implications. This course has made me realize the importance of being aware of these issues when conducting data science and asking critical questions like:

- In what ways should companies be allowed to use the data they collect?

- How do these ethical concerns manifest at different stages of a data science effort, such as data collection, modeling/analysis, deployment, and usage?

- What societal-level issues arise when AI systems are used on a large scale?

- Who should be responsible when an algorithmic subject is at the mercy of decisions made by AI systems?

Fall 2024

DSC291a: Interpretable & Explainable ML

Instructor: Prof. Berk Ustun

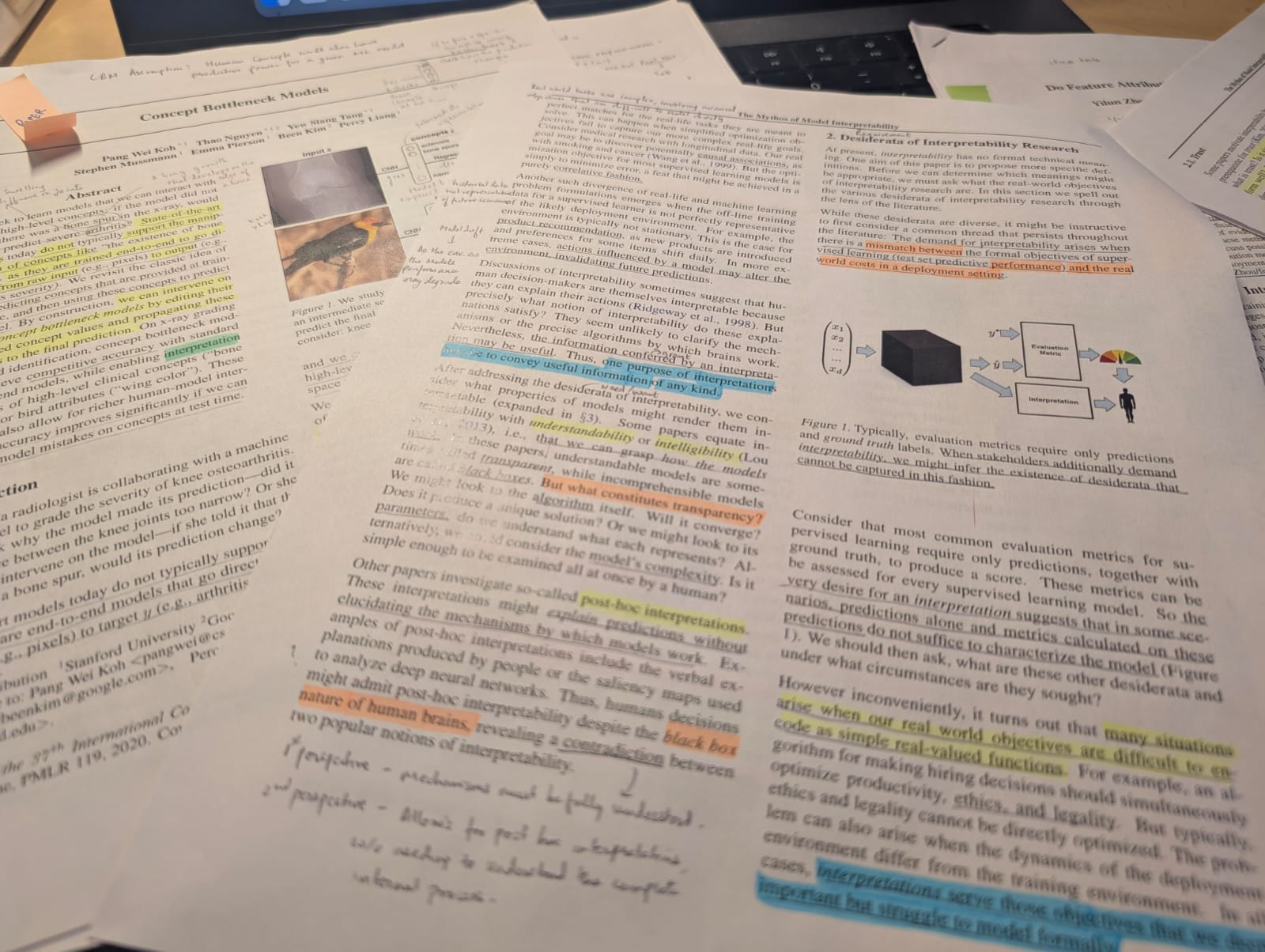

This course introduced me to a new way of learning, emphasizing thorough reading of research papers and rigorous brainstorming and critique during class discussions. It was both intellectually stimulating and challenging.

We explored 17 papers spanning the domains of interpretable machine learning (IML) and explainable machine learning (XML), including case studies and user studies on the practical implementation of IML in organizations. The course began with foundational IML literature, covering key methods such as decision trees, decision sets, and Concept Bottleneck Models (CBMs). It then transitioned to XML techniques like LIME, SHAP, and saliency maps, examining their advantages, limitations, and applicability.

Key Takeaways:

- Model size as a proxy for interpretability can be misleading: For example, larger decision trees may sometimes be more comprehensible due to the inclusion of more informative attributes that make them intuitive for specific users and contexts.

- The Rashomon Effect and predictive multiplicity: These concepts highlight how multiple models can achieve similar performance while differing significantly in structure and feature sets, impacting model selection, validation, and post-hoc explanations.

- Purpose-driven interpretability: Interpretability in machine learning should align with specific use cases, such as debugging, actionable recourse, personalization, or feature selection.

- Contextual evaluation of explanation methods: The success of an explanation method depends on the use case and the chosen success metrics. Explanation methods should balance sensitivity to both model parameters and data while maintaining robustness and fidelity.

- The myth of the interpretability-performance tradeoff: Contrary to common belief, the tradeoff between interpretability and performance is often overstated.

- Post-hoc explanation limitations: Post-hoc methods rely heavily on approximations, making it crucial to evaluate their reliability critically.

Although this course was time-intensive, it was incredibly rewarding. I enjoyed exploring the breadth of IML and XML, and I’m confident the ideas and concepts I learned will prove invaluable when applying machine learning in practice.

Fall 2024