Sharing my reflections on the courses I took as an MS Data Science student at UCSD

CSE257: Search & Optimization

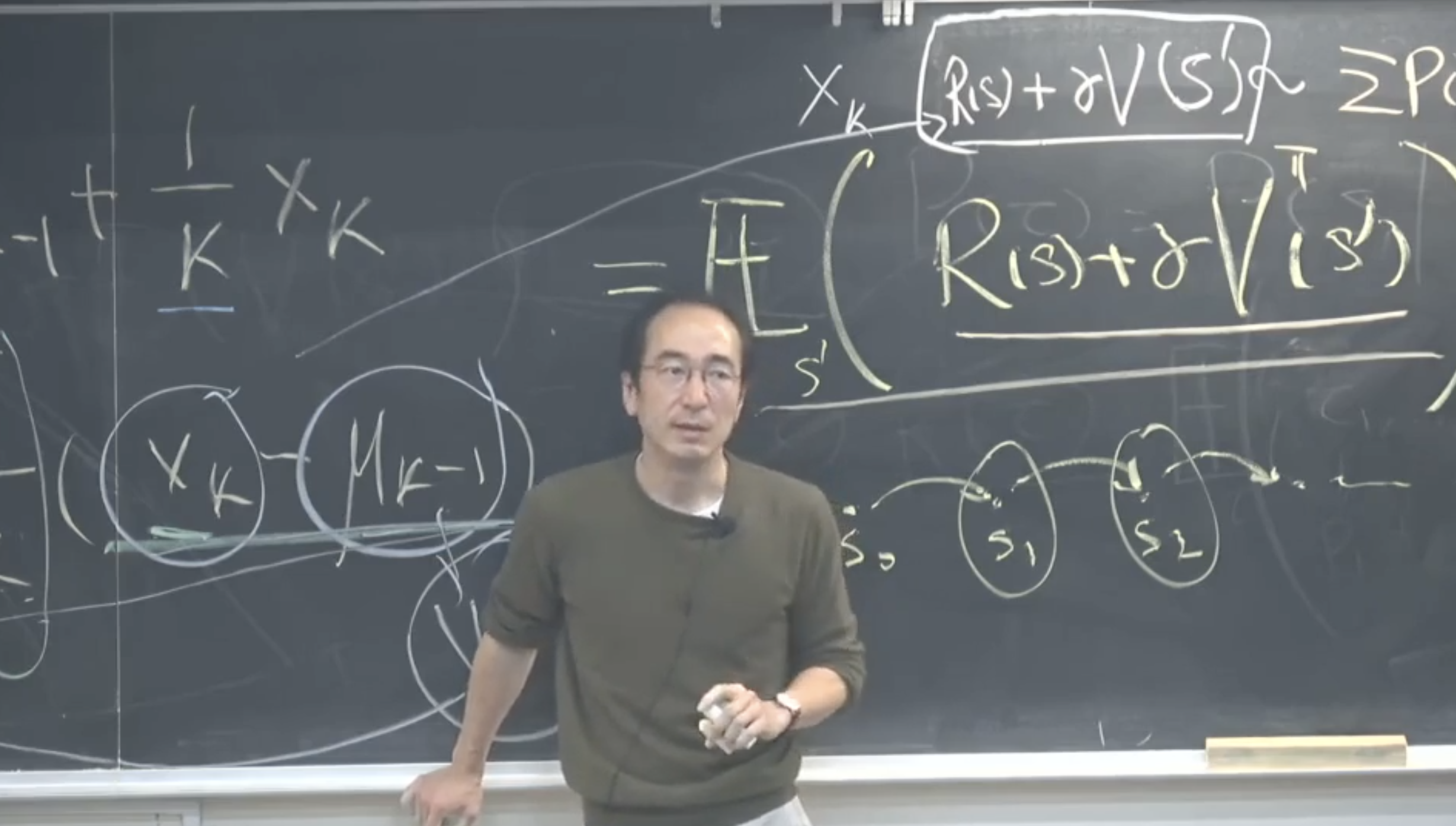

Instructor: Prof. Sicun Gao

This course was a standout experience in my Master’s at UCSD. Prof. Sean Gao’s elegant teaching style made optimization and reinforcement learning truly engaging. What I liked most about Prof. Gao is the time he spends addressing every question the students raise.

The course delved into the following major themes:

- Numerical Optimization: Covered the basics of optimization, such as the first- and second-order necessary and sufficient conditions for optimality, and algorithms such as gradient descent and its variants, including exact line search and the Newton direction.

- Stochastic Optimization: Covered techniques based on random walks such as simulated annealing (SANN), sample-based methods such as the cross-entropy method (CEM), and search gradient (similar to policy gradient).

- RL: Learned about game-tree search algorithms like minimax and expectimax, and how to formulate a given problem as an MDP via its core elements <state space, action space, rewards, transition model, discount factor>. The professor covered the value iteration and policy iteration algorithms. Model-free approaches to learning or estimating values, such as TD (Temporal Difference) policy evaluation, MC (Monte Carlo) policy evaluation, and Q-learning, were covered in great detail.

- Regret Analysis and MCTS: Learned about regret analysis for bandit strategies like ETC (Explore-then-commit), epsilon-greedy, and UCB (Upper Confidence Bound), all of which can achieve sublinear regret (ETC and epsilon-greedy only with a suitably chosen exploration length or a decaying epsilon). Learned to use UCB inside online search algorithms like MCTS.
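To make the UCB idea from the last theme concrete, here is a minimal sketch of UCB1 on a Bernoulli bandit. The arm means, the horizon, and the function name `ucb1` are my own assumptions for illustration, not material from the course. The sketch tracks the expected (pseudo-)regret, which should grow much more slowly than the horizon.

```python
import math
import random


def ucb1(means, horizon, seed=0):
    """Run UCB1 on a Bernoulli bandit with the given (hypothetical) arm means.

    Returns the pull count per arm and the cumulative expected regret.
    """
    rng = random.Random(seed)
    k = len(means)
    counts = [0] * k   # number of pulls per arm
    sums = [0.0] * k   # total observed reward per arm
    best = max(means)
    regret = 0.0
    for t in range(1, horizon + 1):
        if t <= k:
            arm = t - 1  # pull every arm once so the averages are defined
        else:
            # Pick the arm with the largest optimistic estimate:
            # empirical mean + exploration bonus sqrt(2 ln t / n_a).
            arm = max(
                range(k),
                key=lambda a: sums[a] / counts[a]
                + math.sqrt(2 * math.log(t) / counts[a]),
            )
        reward = 1.0 if rng.random() < means[arm] else 0.0
        counts[arm] += 1
        sums[arm] += reward
        regret += best - means[arm]  # expected regret of this pull
    return counts, regret


counts, regret = ucb1([0.2, 0.5, 0.8], horizon=5000)
print("pulls per arm:", counts)
print("cumulative expected regret:", round(regret, 1))
```

In a run like this, most pulls should go to the best arm, and the regret should stay far below the worst case of always pulling the worst arm (0.6 per round here).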

A few key takeaways to remember:

- A policy is not an element of the MDP. It's just the decision rule the agent uses to navigate the environment.

- Why do we need discounting? Without discounting, the sum of future rewards is potentially infinite. How would we know which policy is better if both policies being compared yield an infinite return? In RL, all we care about is learning a good policy.

- The Bellman update is a contraction mapping (when the discount factor is below 1), so it has a unique fixed point, and repeated updates converge to it.

- Why do we learn evaluations and policies? In reality we don't know the MDP (specifically the transition model of the environment, i.e. P(s' | s, a)). Hence, we use sampling-based methods like MC or TD for policy evaluation. Note that TD is online and incremental, whereas in MC (offline) policy evaluation we need to wait for the entire episode to finish before we can calculate the return (it does not use the Bellman equations, so it misses the opportunity to update values on the fly). Both MC and TD evaluate state values under a fixed policy.

- In reality, no one gives us the policy. We need to figure it out ourselves, and one way to do so is the epsilon-greedy approach.

- Q-learning is off-policy (because of the max). Here, the Q-values are only reliable when we visit each state-action pair a large number of times. Deep Q-learning essentially replaces the Q-table with a neural network.

- In practical problems, we should always check the action space first. In continuous action spaces, Q-learning becomes less useful because max_a Q(s, a) becomes an optimization problem in itself. Hence, we should check whether it's possible to discretise the action space.

- Why don't we hear much about Q-learning in fine-tuning LMs? Q-learning attempts a global optimization, in the sense that we form an optimal policy by knowing about the entire space of state-action pairs (it uses the max). When we have limited samples, as when fine-tuning LMs, we want things to be local, and this is where policy gradient comes in. Policy gradient is on-policy.

- Numerical optimization only provides a local view or notion of optimality. In high dimensions there can be exponentially many neighborhoods to explore, and techniques like gradient descent won't find the global optimum on their own. Setting the gradient equal to 0 can yield exponentially many solutions.

- Stochastic optimization methods give us a global view of optimization, and under suitable conditions (for example, a slow enough cooling schedule in simulated annealing) they are guaranteed to converge to a global solution in the limit.
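The contraction and discounting takeaways above can be checked numerically. Below is a minimal sketch on a tiny 3-state, 2-action MDP whose transition probabilities and rewards I made up purely for illustration (names like `bellman_update` and `sup_dist` are also my own). It shows that one Bellman update shrinks the sup-norm distance between two value functions by at least the discount factor, and that value iteration reaches the same fixed point from two different starting points.

```python
# Hypothetical MDP, invented for illustration only.
# P[a][s][s2] = probability of moving from state s to s2 under action a.
P = [
    [[0.9, 0.1, 0.0], [0.0, 0.9, 0.1], [0.0, 0.0, 1.0]],  # action 0
    [[0.1, 0.9, 0.0], [0.1, 0.0, 0.9], [0.0, 0.0, 1.0]],  # action 1
]
R = [[0.0, 0.0, 1.0], [-0.1, -0.1, 1.0]]  # R[a][s], reward for action a in state s
GAMMA = 0.9  # discount factor


def bellman_update(V):
    """One optimality backup: V'(s) = max_a [R(s,a) + gamma * sum_s2 P(s2|s,a) V(s2)]."""
    n = len(V)
    return [
        max(
            R[a][s] + GAMMA * sum(P[a][s][s2] * V[s2] for s2 in range(n))
            for a in range(len(P))
        )
        for s in range(n)
    ]


def sup_dist(V, W):
    """Sup-norm distance between two value functions."""
    return max(abs(v - w) for v, w in zip(V, W))


def value_iteration(V, tol=1e-10, max_iters=10_000):
    """Apply the Bellman update until successive iterates are within tol."""
    for _ in range(max_iters):
        V_new = bellman_update(V)
        if sup_dist(V_new, V) < tol:
            return V_new
        V = V_new
    return V


V = [0.0, 0.0, 0.0]
W = [50.0, -20.0, 7.0]  # an arbitrary second starting point

# Contraction: the distance after one update is at most GAMMA times the distance before.
ratio = sup_dist(bellman_update(V), bellman_update(W)) / sup_dist(V, W)
print("shrink ratio after one update:", ratio)

# Unique fixed point: both starting points end up at the same values.
V_star = value_iteration(V)
W_star = value_iteration(W)
print("fixed point from V:", [round(v, 4) for v in V_star])
print("fixed point from W:", [round(w, 4) for w in W_star])
```

State 2 is absorbing with reward 1 per step, so its value is the geometric sum 1 / (1 - 0.9) = 10. With a discount factor of 1 that sum would diverge, which is exactly the problem discounting avoids.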

Winter 2025